I’m sure there are a ton of people out there who have lived through this scenario.

A system fails. Locate the issue. Rush to repair or replace. Update while it’s out of service. Patch related systems and drivers. Add some bells and whistles. Turn it on. Hope for the best. Chase issues. Fix issues. Hope for the best. Track dependencies. Fix issues. Hope for the best. Fix issues. Pray it works. Fix issues. Hope for the best.

This, to me, represents the path of a lot of technical staff when their backs are against the wall. It happens to all of us, especially when dealing with production systems in a fluid corporate environment. Management seldom knows, or cares, what it takes to resolve most IT issues. They only see downtime and dollars. They seldom approve testing and development of new or updated systems because they see things only from a cost and man-hour viewpoint. It’s their job. Pencils and pennies.

I’ve been on both sides of this coin. Pun intended. I’ve worked in small businesses where there simply wasn’t any money for testing new systems, and also in a very large corporate environment where change is seen as the root of all evil on the balance sheet. When you work in a department the bean counters list as a big red blotch on the bottom line, it’s very difficult to justify updates or upgrades, let alone a test environment to figure things out before you throw the switch on some whim a marketing knucklehead sold to their boss.

I’ve been fortunate, in most cases, to have some testing time before production. I’ve always padded my schedule and budget to allow for it. I did say ‘most cases’.

I recently built a new system for my in-house network: a file server to replace three other storage devices. Before choosing an application for production, and before the actual build itself, I created virtual instances of each candidate. I researched, tested and destroyed those virtual applications multiple times. I made mistakes and reloaded applications more often than I’ll admit here. I then used everything I learned screwing things up to build what I wanted as a production package.

Here’s the point: there is so much information available for free that the only real cost of testing and development has been reduced to time. I understand that in a firestorm, time is what you don’t have; however, when things are quiet and systems are running as they should, you should be expanding your knowledge of how to make things better. Test beds and virtual environments are so easy to put together these days, at such minimal cost, that they are easy to justify to the bean counters as preventative maintenance.

My workflow normally follows this path: idea, virtual environment, AWS or DigitalOcean configuration, then a proposal and funding for production hardware. I can package multiple systems across dozens of virtual machines, in any combination, for concept, testing and approval. I then have a proven package in a working environment before I ever put together a proposal, and I can present it as a functional product with confidence that the concept is proven. If they say no, I delete it. It has only cost me time and brain cells. No RMAs required.

One early morning we woke up to rumbling thunder and lightning, and in the blink of an eye a huge bolt hit nearby and the house went dark for a couple of seconds. I was instantly awake and listened patiently for each of my systems to begin its shutdown cycle and send out notifications to my phone.


So even though the spike wreaked havoc on a UPS circuit board, killed a couple of batteries and melted the filter stack so badly that the UPS itself had to be replaced, the system actually did what it was supposed to do. My system was protected, failover worked correctly and the FreeNAS file server was isolated to protect itself. All the other systems came back up and notifications went zipping to my phone as planned.
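For readers who want to reason about the shutdown-and-notify behavior before trusting it in production, here is a minimal sketch that simulates the idea: when the UPS reports it is on battery, each protected host is shut down in a chosen order and a message is queued for the phone. Everything here is a hypothetical illustration, not my actual configuration: the host names, the priority numbers and the choice to take the file server down first are assumptions, and nothing is really powered off or sent. A real setup would hand these steps to its UPS monitoring software and a notification service.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A machine protected by the UPS (names used below are hypothetical)."""
    name: str
    priority: int  # lower number = shut down earlier


@dataclass
class ShutdownPlan:
    order: list = field(default_factory=list)          # host names, in shutdown order
    notifications: list = field(default_factory=list)  # messages that would go to the phone


def on_power_event(hosts, on_battery):
    """Build (but do not execute) a shutdown plan for a UPS power event.

    Nothing is shut down or sent here; this only shows the ordering logic.
    """
    plan = ShutdownPlan()
    if not on_battery:
        # Utility power is fine, so there is nothing to do.
        return plan
    # sorted() is stable, so hosts with equal priority keep their listed order.
    for host in sorted(hosts, key=lambda h: h.priority):
        plan.order.append(host.name)
        plan.notifications.append(f"{host.name}: power lost, starting clean shutdown")
    return plan


if __name__ == "__main__":
    hosts = [
        Host("firewall", priority=2),
        Host("file-server", priority=1),  # assumed policy: protect the storage first
    ]
    for message in on_power_event(hosts, on_battery=True).notifications:
        print(message)
```

Keeping the plan separate from the actions makes it easy to test the ordering in a virtual environment before wiring it to real hardware, which is the whole point of this post.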

I use an old Dell machine to run my firewall. I built the FreeNAS system, which has so many features and options that I haven’t even scratched the surface yet. What am I going to do with all of this?

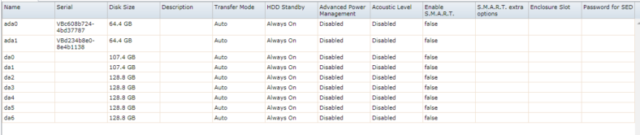

You can see them in the lower right corner of the picture. The configuration I am building needs fourteen SATA ports. While researching the right controller card, I found on the FreeNAS forums the card model that was most widely used and proven to work in the kind of system I am building.
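Working out how many controller cards a build like this needs is simple arithmetic: subtract the SATA ports the motherboard already provides, then round up by the number of drives each card can host. A minimal sketch, assuming a hypothetical motherboard with six onboard SATA ports and an eight-port card; the post doesn’t say which board or card was used, so plug in your own numbers.

```python
def hba_cards_needed(drives: int, onboard_ports: int, ports_per_card: int) -> int:
    """Number of add-in controller cards needed to cover drives the motherboard can't."""
    if ports_per_card <= 0:
        raise ValueError("ports_per_card must be positive")
    # Drives left over after using every onboard SATA port.
    remaining = max(0, drives - onboard_ports)
    # Integer ceiling division: a partly used card still counts as a whole card.
    return (remaining + ports_per_card - 1) // ports_per_card


if __name__ == "__main__":
    # Hypothetical numbers: 14 ports needed, 6 onboard, 8 per card.
    print(hba_cards_needed(drives=14, onboard_ports=6, ports_per_card=8))
```

With those assumed numbers, one eight-port card covers the eight drives the motherboard can’t.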

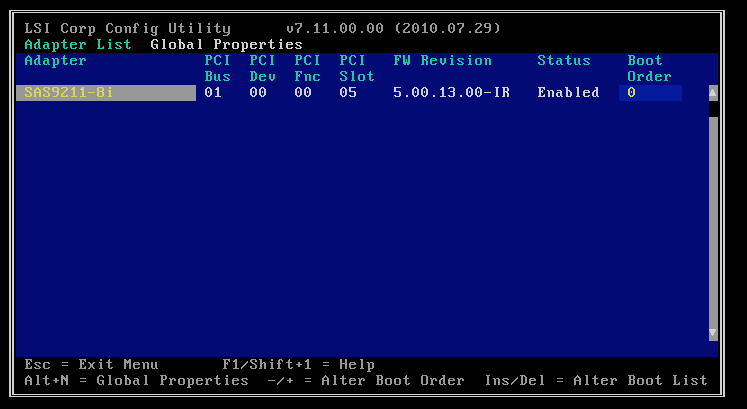


I purchased the card from Amazon; however, these cards can be found used all over the internet and can be purchased in either IT or IR mode. For my application I wanted a card in IT mode, since I didn’t need the hardware to control my volume; software was going to handle that. I did, however, want to make sure I was running the latest firmware available, so I didn’t care which mode the card shipped in, since I was going to upgrade it anyway. While upgrading the firmware, I could easily switch it to IT mode.
